Cost-per-unit is the most devastating flawed paradigm TOC has challenged. In my experience many managers, and certainly most certified accountants, are aware of some of the potential distortions. One needs to examine several situations to appreciate the full impact of the distortion.

Cost-per-unit supports a simple process for decision-making, and this process is “the book” that managers believe they should follow. It is difficult to blame a manager for making decisions based on cost-per-unit. The blind acceptance of cost-per-unit has many more ramifications, like the concept of “efficiency” on which most performance measurements are based. TOC logically proves how those “efficient” performance measurements force loyal employees to take actions that damage the organization.

Does Throughput Accounting offer a valid “book” replacing the flawed concept of cost-per-unit?

Hint: Yes, but some critical developments are required.

The P&Q is a famous example, originally used by Dr. Goldratt, which proves that cost-per-unit gives a wrong answer to the question: how much money can the company in the example make?

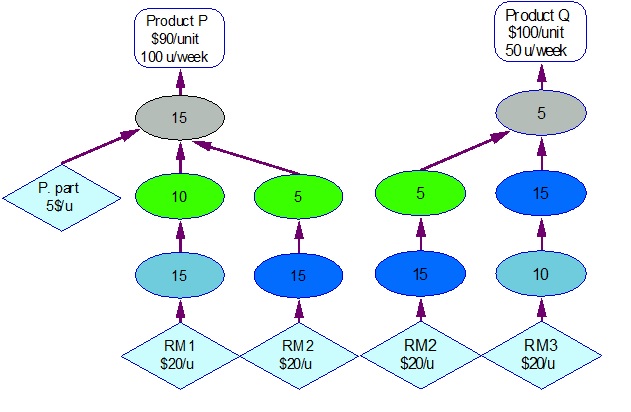

Every colored ellipse in the picture represents a resource that is available 8 hours a day, five days a week. The chart represents the routing for two products: P and Q. The numbers in the colored ellipses represent the time-per-part in minutes. The weekly fixed expenses are $6,000.

The first mistake is ignoring the possible lack of capacity. The Blue resource is actually a bottleneck, preventing the full production of all the required 100 units of P and 50 units of Q every week. The obvious required decision is:

What current market should be given up?

The regular cost accounting principles lead us to give up part of the P sales, because a unit of P sells for a lower price than Q, requires more materials and also takes longer work time.

This is the second common mistake. When you check what happens when some of the Q sales are given up instead of P units, you realize that giving up Q is the better decision!

The reason is that the Blue resource is the only resource that lacks capacity, and the Q product requires much more time from the Blue than from the rest of the resources, which have idle capacity.

The simple and effective way to demonstrate the reasons behind what seems like a big surprise is to calculate for every product the ratio T/CU: throughput (selling price minus the material cost) divided by the time required from the capacity constraint. In this case a unit of P yields T of ($90-$45) divided by 15 minutes = $3 per minute of Resource B (the Blue resource) capacity. Product Q yields only ($100-$40) divided by 30 minutes = $2 per minute of B.
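The T/CU arithmetic can be written out as a minimal Python sketch. The prices, material costs and Blue times are the ones quoted in the text; the dictionary layout and function names are only illustrative.

```python
# Figures quoted in the text for the P&Q example.
PRODUCTS = {
    "P": {"price": 90, "materials": 45, "blue_minutes": 15},
    "Q": {"price": 100, "materials": 40, "blue_minutes": 30},
}


def throughput_per_unit(product):
    """Throughput (T) per unit: selling price minus material cost."""
    return product["price"] - product["materials"]


def t_per_constraint_minute(product):
    """T/CU: throughput per minute of the constraint (the Blue resource)."""
    return throughput_per_unit(product) / product["blue_minutes"]


for name, product in PRODUCTS.items():
    print(f"{name}: T = ${throughput_per_unit(product)}, "
          f"T/CU = ${t_per_constraint_minute(product):.2f} per Blue minute")
```

P comes out at $3.00 per Blue minute and Q at $2.00, so under this rule P gets priority on the Blue resource.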

This is quite a proof that cost-per-unit distorts decisions. It is NOT a proof that T/CU is always right. According to regular cost-accounting principles, once the Blue is recognized as a bottleneck, the normal result is a loss of $300 per week. When the T/CU rule is followed, the result is a profit of $300 per week.
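The two weekly results can be reproduced with a short Python sketch. It assumes, as the text states, that the Blue is the only resource short of capacity, so only Blue minutes are checked; the helper names are illustrative.

```python
CAPACITY = 8 * 60 * 5                       # minutes per resource per week (2400)
FIXED_EXPENSES = 6000                       # weekly fixed expenses in dollars
DEMAND = {"P": 100, "Q": 50}                # weekly market demand in units
THROUGHPUT = {"P": 90 - 45, "Q": 100 - 40}  # price minus materials, per unit
BLUE_MINUTES = {"P": 15, "Q": 30}           # minutes on the Blue per unit


def blue_load(mix):
    """Total Blue minutes needed to produce the given mix."""
    return sum(BLUE_MINUTES[p] * qty for p, qty in mix.items())


def weekly_profit(mix):
    """Total throughput of the mix minus the weekly fixed expenses."""
    return sum(THROUGHPUT[p] * qty for p, qty in mix.items()) - FIXED_EXPENSES


def mix_with_priority(first, second):
    """Meet the demand for `first`, then make as much `second` as the Blue allows."""
    first_qty = min(DEMAND[first], CAPACITY // BLUE_MINUTES[first])
    remaining = CAPACITY - first_qty * BLUE_MINUTES[first]
    second_qty = min(DEMAND[second], remaining // BLUE_MINUTES[second])
    return {first: first_qty, second: second_qty}


full_demand_load = blue_load(DEMAND) / CAPACITY  # 1.25, i.e. 125% load
q_first = mix_with_priority("Q", "P")            # the cost-accounting choice
p_first = mix_with_priority("P", "Q")            # the T/CU choice

print(f"Blue load at full demand: {full_demand_load:.0%}")
print(f"Q first: {q_first}, profit ${weekly_profit(q_first)}")
print(f"P first: {p_first}, profit ${weekly_profit(p_first)}")
```

Giving priority to Q leaves room for only 60 units of P and ends with a $300 loss, while giving priority to P leaves room for 30 units of Q and ends with a $300 profit.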

I claim that the T/CU is a flawed concept!

I still claim that the concept of throughput, together with operating-expenses and investment, is a major breakthrough for business decision-making!

The above statement about T/CU has already been presented by Dr. Alan Barnard in TOCICO and in a subsequent paper. Dr. Barnard showed that when there is more than one constraint, T/CU yields wrong answers. I wish to explain the full ramifications of that on decisions that are taken prior to the emergence of new capacity constraints.

The logic behind T/CU is based on two critical assumptions:

- There is ONE active capacity constraint and only one.

- Comment: An active capacity constraint means that if we got a little more capacity the bottom-line would go up, and if we wasted a little of that capacity the bottom-line would definitely go down.

- The decision at hand is relatively small, so it would NOT cause new constraints to appear.

Some observations of reality:

Most organizations are NOT constrained by their internal capacity! We should note two different situations:

- The market demand is clearly lower than the load on the weakest-link.

- While one, or even several, resources are loaded to 100% of their available capacity, the organization has the means to get additional capacity quickly enough for a certain price (delta(OE)), like overtime, extra shifts, temporary workers or outsourcing. In this situation the lack of capacity does not limit the generation of T and profit, and thus the capacity is not the constraint.

The second critical assumption, that the decision considered is small, means the T/CU should NOT be used for the vast majority of new marketing and sales initiatives! This is because most marketing and sales moves could easily cause extra load that penetrates into the protective capacity of one, or more, resources, creating interactive constraints that disrupt the reliable delivery. Every company using promotions is familiar with the effects of running out of capacity and what happens to the delivery of the products that are not part of the promotion.

That said, it is possible that there are enough means to quickly elevate the capacity of the overloaded resources, but certainly both operations and financial managers should be well prepared for that situation.

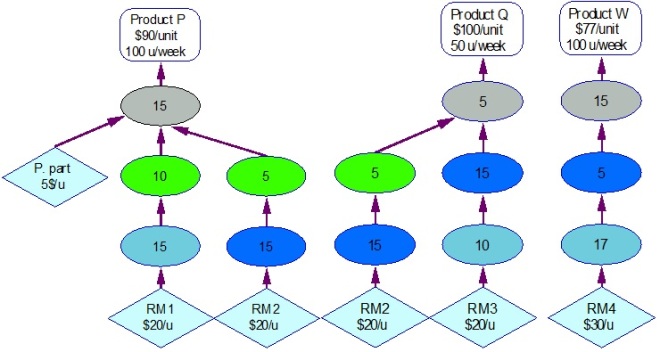

Let’s view a somewhat different P&Q problem:

Suppose that the management considers adding an additional product W, without adding more capacity. The new product W uses the Blue resource capacity, but relatively little.

The question is: What are the ramifications?

Before Product W the company had only the Blue resource as a bottleneck (loaded to 125%); now three resources are overloaded. The most loaded resource is now the Light-Blue (154%), followed by the Blue (146%), and the Grey reaches 135%.

So, according to which resource should the T/CU guide us?

Finding the “optimized solution” does not follow any T/CU guidance. The new product seems great from the Blue machine perspective. Product W ratio of T/time-by-the-Blue = ($77-$30)/5 = $9.4, which is the best of all products. If we went all the way with this T/CU, we would sell all the W demand, part of the demand for P (we have the blue capacity for 46 units of P) and none of the Q. That mix would generate a profit of $770, which is more than the $300 profit without the W product.

Is it the best profit we can get?

When you calculate the T/CU relative to the Light-Blue resource, however, Product W is the lowest, with only $2.76 per minute of Light-Blue.

Linear programming techniques can be used in the ultra-simple example above. As only sales of complete items are realistic, a profit of $1,719 can be reached by selling 97 units of P, 23 units of Q and 42 units of W. This is considerably higher than without Product W, but also much higher than relying on the T/CU of the Blue resource!
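The profit figures for the three mixes discussed so far can be checked with a small Python sketch. It only verifies the arithmetic; it does not test capacity feasibility, because the per-resource times for Product W appear only in the chart. The W quantity in the "W first" mix is not stated in the text, so the sketch backs it out from the quoted $770 profit; treat that number as an inference.

```python
FIXED_EXPENSES = 6000  # weekly fixed expenses in dollars
THROUGHPUT = {"P": 90 - 45, "Q": 100 - 40, "W": 77 - 30}  # per unit


def weekly_profit(mix):
    """Total throughput of the mix minus the weekly fixed expenses."""
    return sum(THROUGHPUT[p] * qty for p, qty in mix.items()) - FIXED_EXPENSES


# Inference, not a figure from the text: the W quantity implied by
# "46 units of P, all of W, no Q" yielding a $770 profit.
implied_w_units = (770 + FIXED_EXPENSES - 46 * THROUGHPUT["P"]) / THROUGHPUT["W"]

mixes = {
    "P and Q only, T/CU on Blue": {"P": 100, "Q": 30},
    "W first, T/CU on Blue": {"P": 46, "W": int(implied_w_units)},
    "Whole-unit mix from the text": {"P": 97, "Q": 23, "W": 42},
}

for label, mix in mixes.items():
    print(f"{label}: {mix} -> profit ${weekly_profit(mix):,}")
```

The three mixes come out at $300, $770 and $1,719, matching the figures quoted above.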

As already mentioned, the above conclusions have already been dealt with by Dr. Barnard. The emphasis on decision-making that could cause the emergence of constraints is something we have to be able to analyze at the decision-making stage.

Until now we have respected the capacity limitations as is. In reality we usually have some more flexibility. When there are easy and fast means to increase capacity, for instance paying the operators for overtime, then a whole new avenue is opened for assessing the worthiness of adding new products and new market segments. Even when the extra capacity is expensive – in many cases the impact on the bottom-line is highly positive.
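To make the point concrete, here is a minimal Python sketch that goes back to the original two-product P&Q case and asks whether buying overtime on the Blue to produce the 20 units of Q that were given up is worthwhile. The overtime rate of $1 per minute is purely hypothetical, and the sketch assumes the other resources have enough idle capacity for the extra units.

```python
THROUGHPUT_Q = 100 - 40          # T per unit of Q, from the text
BLUE_MINUTES_Q = 30              # Blue minutes per unit of Q, from the text
UNMET_Q = 50 - 30                # Q demand minus the Q sold in the T/CU mix
OVERTIME_COST_PER_MINUTE = 1.00  # HYPOTHETICAL price of extra Blue capacity


def net_gain_from_overtime(units, throughput, minutes_per_unit, cost_per_minute):
    """Delta(T) minus delta(OE) for producing extra units on bought capacity."""
    delta_t = units * throughput
    delta_oe = units * minutes_per_unit * cost_per_minute
    return delta_t - delta_oe


net_gain = net_gain_from_overtime(
    UNMET_Q, THROUGHPUT_Q, BLUE_MINUTES_Q, OVERTIME_COST_PER_MINUTE
)
# Overtime stops paying off once its price per minute reaches T per Blue minute.
break_even_rate = THROUGHPUT_Q / BLUE_MINUTES_Q

print(f"Net weekly gain from overtime: ${net_gain:,.0f}")
print(f"Break-even overtime rate: ${break_even_rate:.2f} per minute")
```

Under the assumed rate, the extra 600 minutes of Blue capacity add $1,200 of throughput for $600 of additional operating expenses, so the weekly profit would rise by $600.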

The non-linear behavior of capacity (there is a previous post dealing with it) has to be viewed as a huge opportunity to drive the profits up by product-mix decisions and by the use of additional capacity (defined as the “capacity buffer”). Looking towards the longer time-frame could lead to superior strategy planning, understanding the worth of every market segment and using the capacity buffer to face the fluctuations of the demand. This is the essence of Throughput Economics, an expansion of Throughput Accounting using the practical combination of intuition and hard-analysis as the full replacement of the flawed parts of cost accounting.

T/CU is useless when the use of the capacity buffer, the means for quick temporary capacity, is possible. When relatively large decisions are considered the use of T/CU leads to wrong decisions similar to the use of cost-per-unit.

Goldratt told me: “I have a problem with Throughput Accounting. People expect me to give them a number and I cannot give them a number”. He meant the T/CU number is too often irrelevant and distorting. I do not have a number, but I believe I have an answer. Read my paper on a special page in this blog entitled “TOC Economics: Top Management Decision Support”. It appears on the menu at the left corner.

And making the production questions Eli mentions above more problematic is the likelihood that some customers buy a selection of Ps, Qs and Ws, on demand which is uncertain. Stopping making as many Qs may lose a customer, unless an attractive alternative supplier is available. And, oops, let’s hope the alternative supplier isn’t too attractive, for example with the capacity to produce not only Ps, Qs and Ws but Rs, Ss, Ts and Us, as well.

It is a hard (but fun) life. About the time you think you simplified it, you discover that it is more interconnected than you first thought.

That is my “marmalade” situation Henry!!

I am fussy about the marmalade I buy. The local supermarket that we used to use decided not to stock it any longer. It probably, by itself, didn’t generate enough T/m of shelf space. The problem was that when I wanted some more marmalade, I took the whole shop to another store, so they lost significantly more T than just the odd jar of marmalade!

This is a wonderful example. The flaw is assuming that the sales of every product are independent from other sales.

The problem is: there is no practical way to reliably capture within the computerized system the partial dependencies between variables. Just to illustrate the problem: Ian might not have moved to another store if the alternative store was too far away, or lacked another item that is important to Ian. Moreover, how can the store “know” how many customers, like Ian, see that marmalade as a key item and don’t like to compromise by buying another brand?

In my vision for Throughput Economics (TOC Economics) I combine intuitive inputs with hard analysis. So, the question of how many customers might move to another store because of the lack of a specific slow-mover has to be raised. The number used would not necessarily be the right one, but it’d sharpen the intuition of key people to achieve satisfactory results. This is much more than the current situation of treating numbers as if they represent the full picture.

> profit of $1,719 can be reached by selling 97 units of P, 23 units of Q and 42 units of W.

Better: sell 28 P, 50 Q, and 87 W for profit of $2349.

Yes Josh, if it is realistic to sell exactly these quantities, and if you can manage interactive constraints in operations (!), then this is a superior result, even to what is mentioned in Chapter 6 of the book: 35 P, 49 Q and 81 W, which yields $2,322. We can try to optimize the results, but we need the complex use of linear programming, and if we had setup time, we’d need an even more complex algorithm. The realistic problem of handling interactive constraints has to be solved in reality.

The key point is that T/CU should NOT be used in such a case. Take several realistic scenarios, ones that can actually be sold, and check which is best. What I wanted to highlight is that adding the W product makes a very substantial change, and even the first check yields a profit that is way above what can be achieved with just two products.

> if it is realistic to sell exactly these quantities, and if you can manage interactive constraints in operations (!), then this is a superior result

You don’t need to sell exactly those quantities. Say you try for 28 P, 50 Q, and 87 W. Even if you fall short by up to 10 units of anything, you’ll still do better than $1,719. For example, selling 5 fewer P and 5 fewer W still yields a profit of $1,889 (45*23 + 60*50 + 47*77 – 6000). See https://play.golang.org/p/wDlHD4Tyknr

Typo: My example calculation should have 47*82 (5 fewer W), not 47*77.
