The power of today's computers opens a new way to assess the impact of a variety of ideas on the performance of an organization, one that takes into account both complexity and uncertainty. The need stems from the common view of organizations and their links to the environment as inherently complex, while also exposed to high uncertainty. Thus every decision, sensible as it may seem at the time, could easily lead to very negative results.

One of the pillars of TOC is the axiom/belief that every organization is inherently simple. Practically, it means that only a few variables truly limit the performance of the organization, even under significant uncertainty.

The use of simulations could bridge the gap between a seemingly complex system and the relatively simple rules needed to manage it well. In other words, simulations can and should be used to reveal the simplicity. Uncovering the simple rules is especially valuable in times of change, no matter whether the change results from an internal initiative or from an external event.

Simulations can be used to achieve two different objectives:

- Providing the understanding of the cause-and-effect in certain situations and the impact of uncertainty on these situations.

The understanding is achieved through a series of simulations of a chosen well-defined environment that shows the significant difference in results between various decisions. An effective educational simulator should prove that there is a clear cause-and-effect flow that leads from a decision to the result.

Self-discovery of ideas and concepts is a special, optional subset of educational simulators. It requires the ability to make many different decisions, as long as the logic behind the actual results is clear.

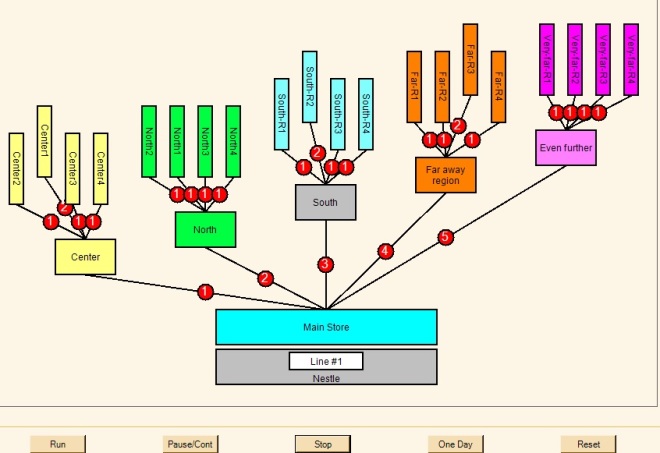

A simple educational simulator for distribution systems

- Supporting hard decisions by simulating a specific environment in detail, letting the user dictate a variety of parameters that represent different alternatives and get a reliable picture of the spread of results. The challenge is to model the environment in a way that keeps the basic complexity and represents well all the key variables that truly impact the performance.

I started my career in TOC by creating a computer game (the ‘OPT Game’) that aimed to “teach managers how to think”, and then continued to develop a variety of simulations. While most of the simulators were for TOC education, I also developed two simulations for specific environments, aimed at answering specific managerial questions.

The power of today's computers is such that developing wide-scope simulators, which can be adjusted to various environments and eventually support very complex decisions, is entirely feasible. My experience shows that the basic library of functions of such simulators should be developed from scratch, as using general modules provided by others slows the simulations to the point where they are unusable. Managers have to make many of their decisions very fast. This means the supporting information has to be readily accessible. Being fast is one of the critical necessary conditions for wide-scope simulations to serve as an effective decision support tool.

Dr. Alan Barnard, one of the best-known TOC experts, is also the creator of a full supply chain simulator. He defines the first managerial need as being convinced that the new general TOC policies behind the flow of products would truly work well. But there is also a need to determine the right parameters, like the appropriate buffers and the replenishment times, and this can be achieved by a simulation.
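To make the idea of tuning a buffer through simulation concrete, here is a minimal sketch. It is not Dr. Barnard's simulator; it assumes a single stock location, a hypothetical uniform daily demand between 0 and 20 units, a fixed replenishment time, and a policy of re-ordering each day exactly what was sold. The function name `simulate_lost_sales` and all numbers are illustrative assumptions.

```python
import random
from collections import defaultdict


def simulate_lost_sales(buffer_size: int, replenishment_days: int,
                        days: int = 365, seed: int = 1) -> int:
    """Return the units of demand that could not be served from stock.

    Assumptions (illustrative only): one stock location, daily demand
    uniform on 0..20, and every unit sold is re-ordered the same day and
    arrives after a fixed replenishment time.
    """
    if replenishment_days < 1:
        raise ValueError("replenishment_days must be at least 1")

    rng = random.Random(seed)          # fixed seed: same demand for every run
    on_hand = buffer_size              # start with a full buffer
    arrivals = defaultdict(int)        # arrival day -> quantity on its way
    lost_sales = 0

    for day in range(days):
        on_hand += arrivals.pop(day, 0)       # receive today's deliveries
        demand = rng.randint(0, 20)
        sold = min(demand, on_hand)
        lost_sales += demand - sold           # unmet demand is lost
        on_hand -= sold
        if sold:
            arrivals[day + replenishment_days] += sold  # replenish what was sold

    return lost_sales


# Compare several candidate buffers against the same demand history.
for candidate in (40, 60, 80, 100):
    print(candidate, simulate_lost_sales(candidate, replenishment_days=5))
```

Using the same seed for every candidate means all buffer sizes face an identical demand history, so differences in lost sales come from the buffer alone and not from luck.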

There is a huge variety of other types of decisions that a good wide-scope simulator could support. The basic capability of a simulation is to depict a flow, like the flow of products through the supply chain, the flow of materials through manufacturing, the flow of projects, or the flow of money going in and out. The simulated flow is characterized by its nodes, policies and uncertainty. To support decisions, there is a need to simulate several flows that interact with each other. Only when the product flow, order flow, money flow and capacity flow (purchasing capacity) are simulated together can the essence of the holistic business be captured. The simulator should allow new ideas, like new products that compete with existing products, to be introduced easily and simulated fast enough. The resulting platform for ‘what-if’ scenarios is then open for checking the impact of the idea on the bottom line.

For many decisions the inherent simplicity, as argued by Dr. Goldratt, provides the ability to predict well enough the impact of a proposed change on the bottom line. Throughput Economics defines the process of checking new ideas by calculating the pessimistic and optimistic impact of that idea on the bottom line of the organization. It relies on being able to come up with good enough calculations of the total impact on sales and on capacity consumption to predict the resulting delta-T minus delta-OE.
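The pessimistic and optimistic check can be sketched in a few lines. This is only an illustration of the delta-T minus delta-OE arithmetic; the class `Scenario`, the function names and the figures are hypothetical, and a real assessment would derive delta-T and delta-OE from the detailed sales and capacity calculations.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """One assessment of an idea; all figures per period, same currency."""
    delta_t: float   # change in throughput (T)
    delta_oe: float  # change in operating expenses (OE)


def net_impact(scenario: Scenario) -> float:
    """Return delta-T minus delta-OE for a single scenario."""
    return scenario.delta_t - scenario.delta_oe


def impact_range(pessimistic: Scenario, optimistic: Scenario) -> tuple[float, float]:
    """Return the (pessimistic, optimistic) bottom-line impact of an idea."""
    return net_impact(pessimistic), net_impact(optimistic)


# Hypothetical idea: weak sales with overtime cost vs. strong sales.
low, high = impact_range(
    pessimistic=Scenario(delta_t=20_000, delta_oe=35_000),
    optimistic=Scenario(delta_t=90_000, delta_oe=40_000),
)
print(f"Impact on the bottom line: between {low} and {high}")
```

In this made-up example the idea loses 15,000 in the pessimistic case and gains 50,000 in the optimistic one, which is exactly the kind of range management needs to see before deciding.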

However, sometimes the organization faces events or ideas with wider ramifications, like impacting lead-times or being exposed to the ‘domino effect’, where a certain random mishap causes a sequence of mishaps, so more sophisticated ways to support decisions have to be in place. The extra complication of predicting the full potential ramifications of new ideas can be handled by simulating the situation with and without the changes due to the new ideas. The simulation is the ultimate aid when straightforward calculations are too complex.

Suppose a relatively big company, with several manufacturing sites in various locations throughout the globe, plus its transportation lines, clients and suppliers, is simulated. All the key flows, including the money transactions and their timing, are part of the simulation. This provides the infrastructure where various ideas regarding the market, operations, engineering and supply can be carefully reviewed and given a predicted impact on the net profit. When new products are introduced, determining the initial level of stock in the supply chain is tough because of its high reliance on forecasts. Every decision should be tested according to both the pessimistic and optimistic assumptions, so that management can make a sensible decision that considers several extreme future market behaviors, looking for the decision that minimizes downsides and still captures potential high gains.

Such a simulation can be of great help when an external event disrupts the usual conduct of the organization. For instance, suppose one of the suppliers is hit by a tsunami. While there is enough inventory for the next four weeks, the need is to find alternatives as soon as possible and also to realize the potential damage of every alternative taken. Checking these kinds of ‘what-if’ scenarios is easy to do with such a simulator, which reveals the real financial impact of every alternative.

Other big areas that could use large simulations to check various ideas are the airline and shipping businesses. The key problem in operating transportation is not just the capacity of every vehicle, but also its exact location at a specific time. Any delay or breakdown creates a domino effect on the other missions and resources. Checking the economic desirability of opening a new line has to include the possible impact of such a domino effect. Of course, the exploitation of the vehicles, assuming that they are the constraint, should be a target for checking various scenarios through simulations. Checking various options for dynamic pricing policies, known as yield management, could be enlightening as well.
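The domino effect on a single vehicle can be illustrated with a small deterministic sketch. It assumes one vehicle flying its legs in sequence with a fixed turnaround time between them; the `Leg` class, the function `propagate_delays` and the schedule are hypothetical, and a real model would add crews, gates and connecting passengers.

```python
from dataclasses import dataclass


@dataclass
class Leg:
    name: str
    planned_departure: int  # minutes from the start of the day
    duration: int           # minutes in transit


def propagate_delays(legs: list[Leg], initial_delays: dict[str, int],
                     turnaround: int = 30) -> dict[str, int]:
    """Return the departure delay (minutes) of every leg of one vehicle.

    A leg cannot depart before its own planned time plus any delay of its
    own, nor before the vehicle has finished the previous leg and turned around.
    """
    delays = {}
    vehicle_free_at = 0
    for leg in legs:
        own_earliest = leg.planned_departure + initial_delays.get(leg.name, 0)
        departure = max(own_earliest, vehicle_free_at)
        delays[leg.name] = departure - leg.planned_departure
        vehicle_free_at = departure + leg.duration + turnaround
    return delays


schedule = [
    Leg("A", planned_departure=480, duration=90),
    Leg("B", planned_departure=630, duration=60),
    Leg("C", planned_departure=780, duration=120),
]

# A two-hour mishap on the first leg spills over to the later legs.
print(propagate_delays(schedule, {"A": 120}))
```

The slack planned between legs absorbs part of the mishap, so the spill-over shrinks from leg to leg; a tighter schedule would pass the full delay on.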

While the benefits can indeed be high, one should be aware of the limitations. Simulations are based on assumptions, which opens the way to manipulations or just failures. Let’s distinguish between two different categories of causes for failure.

- Bugs and mistakes in the given parameters. These are failures within the simulation software or wrong inputs representing the key parameters requested by the simulation.

- Failure of the modeling to capture the true reality. It is impossible to simulate reality as is. There are too many parameters to capture. So, we need to simplify the reality and focus only on the parameters that have, or might have under certain circumstances, a significant impact on the performance. For instance, it is crazy to model the detailed behavior of every single human resource. However, we might need to capture the behavior of large groups of people, such as market segments and groups of suppliers.

Modeling the stochastic behavior of different markets, specific resources and suppliers is another challenge. When the actual stochastic function is unknown, there is a tendency to use common mathematical functions like the Normal, Beta or Poisson distributions, even when they don’t match the specific reality.
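One possible alternative, when some history exists, is to resample the observed data instead of forcing a textbook distribution onto it. The sketch below contrasts the two using a made-up history of sporadic daily demand; the data and function names are assumptions, and resampling is just one technique among several.

```python
import random
import statistics

# Hypothetical history of daily demand for a slow-moving item.
history = [0, 0, 1, 0, 3, 0, 0, 12, 0, 2, 0, 0, 0, 7, 1]


def sample_empirical(data: list[int], n: int, rng: random.Random) -> list[int]:
    """Draw n demand values by resampling the observed history."""
    return rng.choices(data, k=n)


def sample_normal(data: list[int], n: int, rng: random.Random) -> list[float]:
    """Draw n values from a Normal fitted to the history's mean and stdev."""
    mu = statistics.mean(data)
    sigma = statistics.stdev(data)
    return [rng.gauss(mu, sigma) for _ in range(n)]


rng = random.Random(7)
empirical = sample_empirical(history, 1000, rng)
normal = sample_normal(history, 1000, rng)

# The fitted Normal produces impossible negative demand; resampling does not.
print("negative values, empirical:", sum(x < 0 for x in empirical))
print("negative values, normal:   ", sum(x < 0 for x in normal))
```

Resampling has its own limitation: it can never produce a value that was not observed, so rare extreme events still have to be added as explicit pessimistic assumptions.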

So, simulations should be subject to careful checks. The first big test should be depicting the current state. Does it really show the current behavior? As there should be enough intuition and data to compare the simulated results with the actual current-state results, this is a critical milestone in the use of simulations for decision support. In most cases there will at first be deviations that occur because of bugs and flawed input. Once the simulation seems robust enough, more careful tests should be done to ensure its ability to predict the future performance under certain assumptions.

So, while there is a lot to be careful about with simulations, there is even more to be gained by better understanding the impact of uncertainty and thereby enhancing the performance of the organization.

I loved the keynote by Dr. Barnard during the Berlin TOCICO conference. Using software simulations to show how a policy like “allocation per retailer” is disastrous is just brilliant. While I fully agree that computers and software today are capable of creating comprehensive simulations and quickly answering questions, as you noted, these can only give answers to the questions that were asked of them. For example, if no one formally realized that “allocation per retailer” is a policy, and that such a policy might be changed, then no simulation would ever find out whether such a policy was good or bad for the supply chain. Recognizing the different variables that can potentially be simulated is still a human thinking endeavor. Computers naturally cannot come up with the questions by themselves. But computers are great at providing answers quickly, should we pose them the correct questions, and this is a relatively new capability that has been commoditized like never before.
